Earlids: Audio Autonomy

A personalized auditory experience that addresses noise pollution. Unlike noise-canceling headphones, Earlids enable users to maintain audio awareness by replacing unpleasant noises with preferred sounds. Earlids represent a future where individuals control their auditory environment, potentially improving life quality in noisy settings.

Purpose: MDes Thesis

Timeline: 4 months

Tools: Max (software), Arduino, silicone, thermochromic pigment, heating element

Guiding insights

Noise pollution is a worldwide problem.

Each year, New York City’s 311 complaint hotline receives more calls reporting noise pollution than any other issue.

The EU aims to reduce noise pollution by 30% by 2030, citing noise as “the second biggest health threat after air pollution.”

Why do we have eyelids but not earlids?

Hearing is the only sense we cannot naturally moderate. While we can shut our eyes in bright environments, or limit breathing through the nose in the presence of offensive smells, we cannot mute environmental noises without manually plugging our ears.

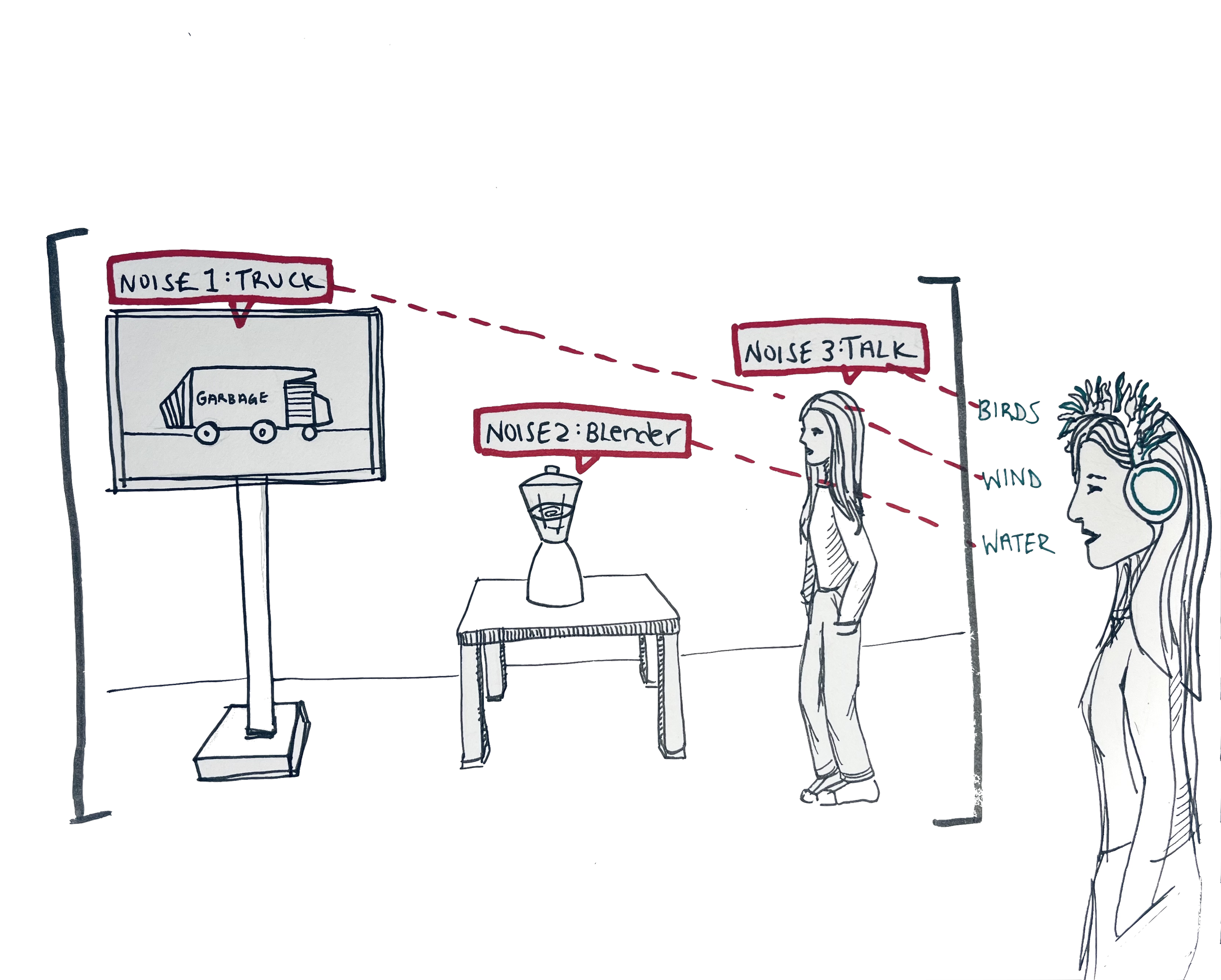

Noise replacement rather than noise cancellation

Five participants shared insights on the nuances of living in noise-polluted environments. These insights, together with existing solutions (Loop earplugs, UW Semantic Hearing, Beoplay H95), inspired several conceptual prototypes.

“I hate the sound of the garbage truck, but it reminds me to take out the trash”

Insight: Noise-cancellation devices eliminate our ability to hear, and with it our audio awareness of our surroundings.

“After moving from NYC to a quiet suburb, the silence felt eerie”

Insight: ‘Noise pollution’ means different things to different people.

Walking past construction, you could opt to replace machinery sounds with nature sounds

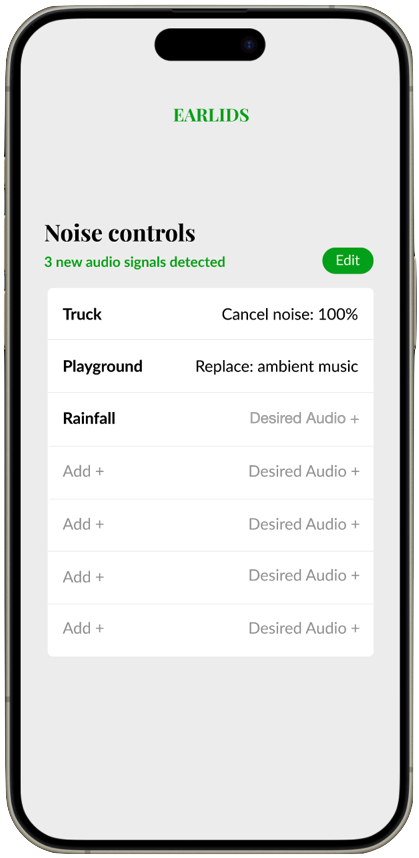

Prototype of an app to detect and personalize your soundscape

Choose replacement sounds from a thematic library to create a cohesive audio experience

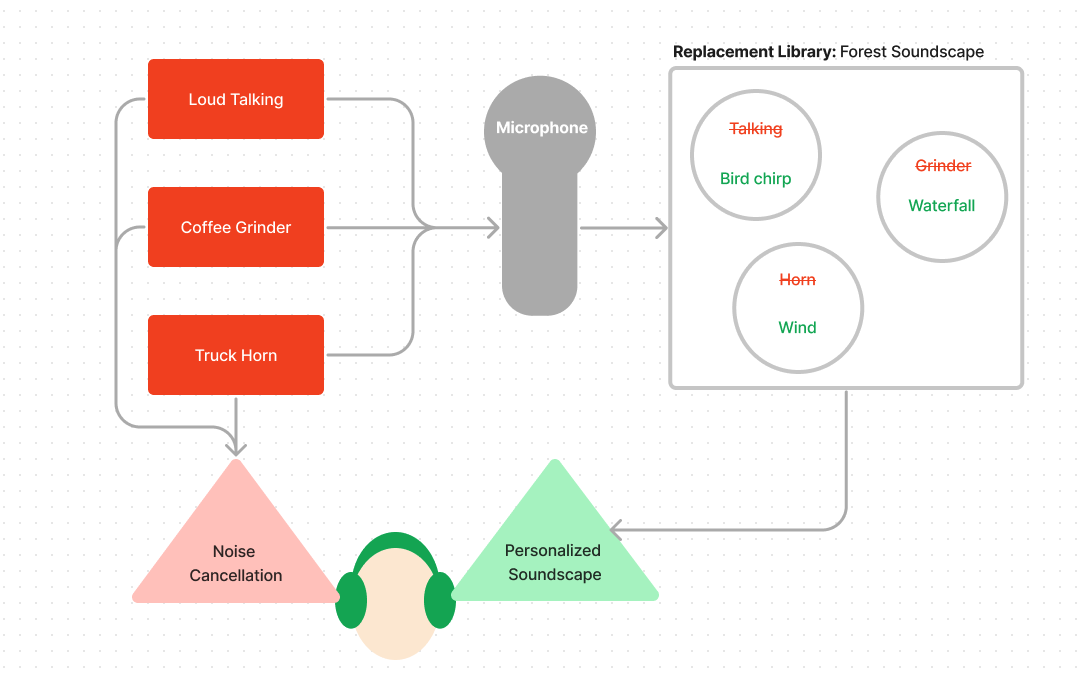

How it works

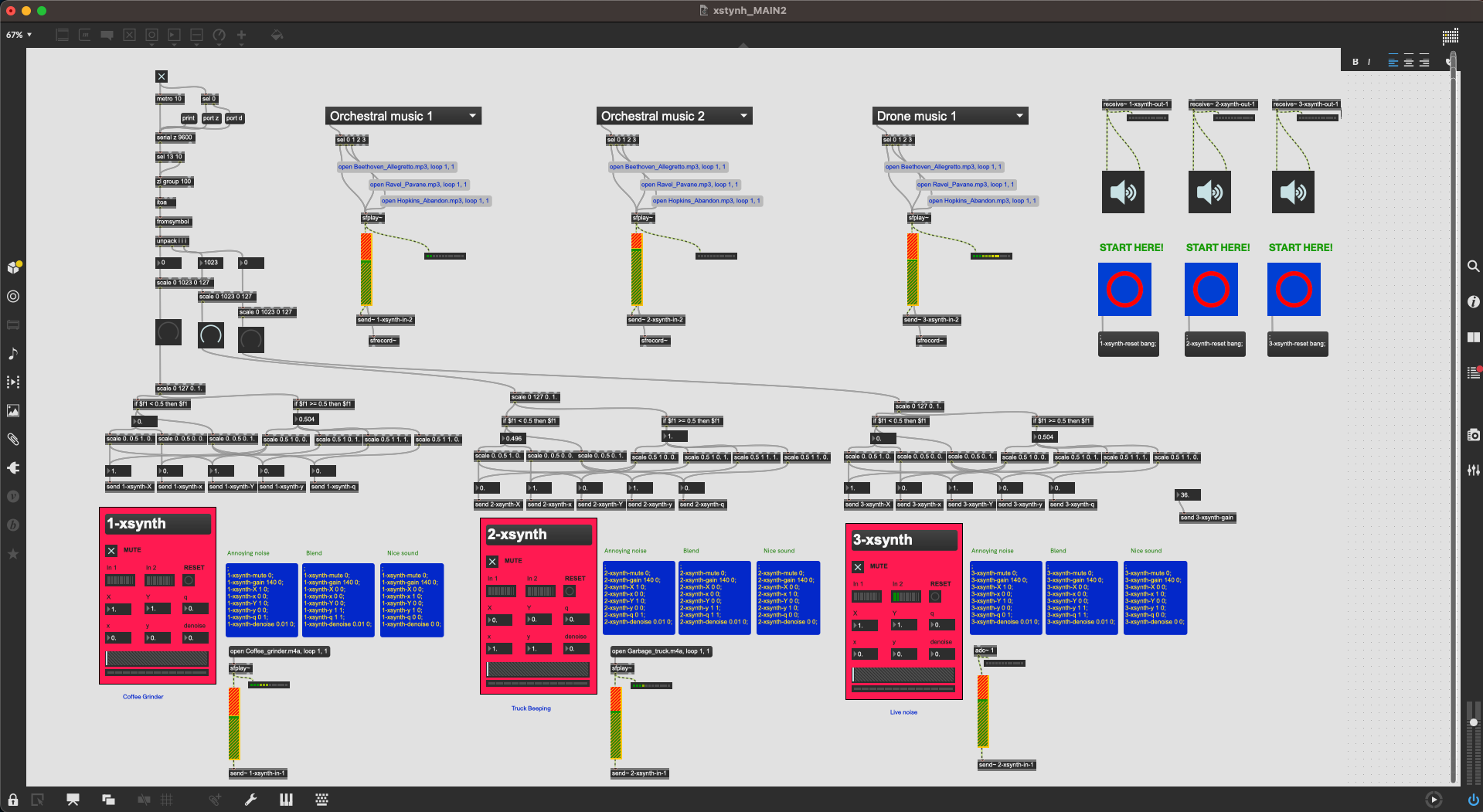

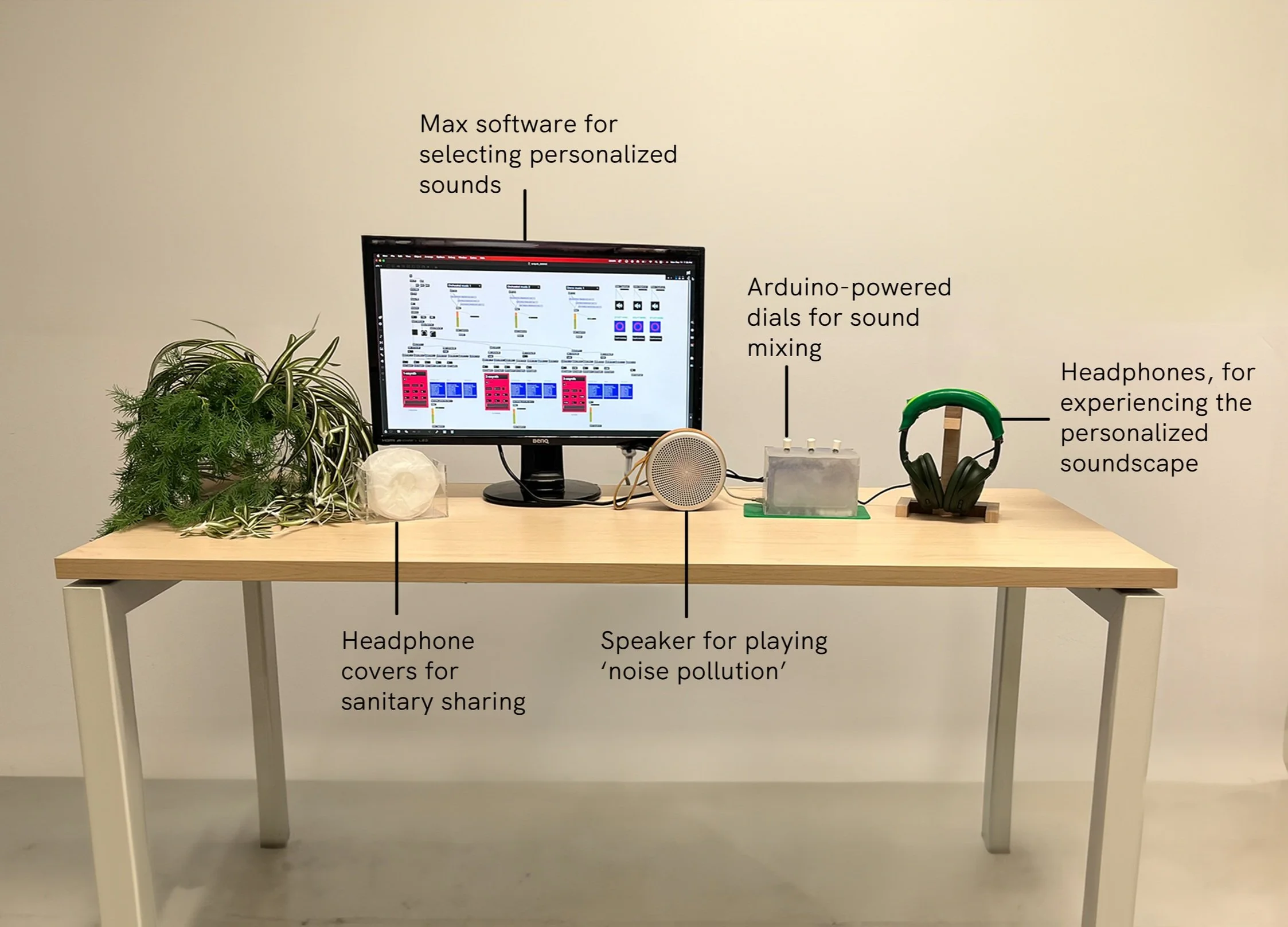

Noise-canceling headphones* mute all environmental noise. Max detects environmental noise via the laptop microphone and plays the user’s preferred sounds through the headphones (e.g., nature or synth tones from a pre-loaded library).
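The detection step can be pictured with a short Python sketch. This is not the Max patch used in the project: it assumes a simple loudness threshold, and the names `rms`, `replacement_gain`, and the `threshold` value are hypothetical choices made for illustration.

```python
import math

def rms(frame):
    """Root-mean-square level of one frame of samples in the range [-1.0, 1.0]."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def replacement_gain(frame, threshold=0.1):
    """Return 1.0 (play the preferred sound) when the microphone frame is loud,
    and 0.0 (stay silent) otherwise. The threshold is an assumed value."""
    return 1.0 if rms(frame) >= threshold else 0.0

# Two synthetic microphone frames: a faint tone and a loud tone.
SAMPLE_RATE = 44100
quiet = [0.01 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(1024)]
loud = [0.8 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(1024)]

print(replacement_gain(quiet), replacement_gain(loud))
```

A hard on/off gate like this switches abruptly, which is one reason a gradual fade is more comfortable to listen to.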

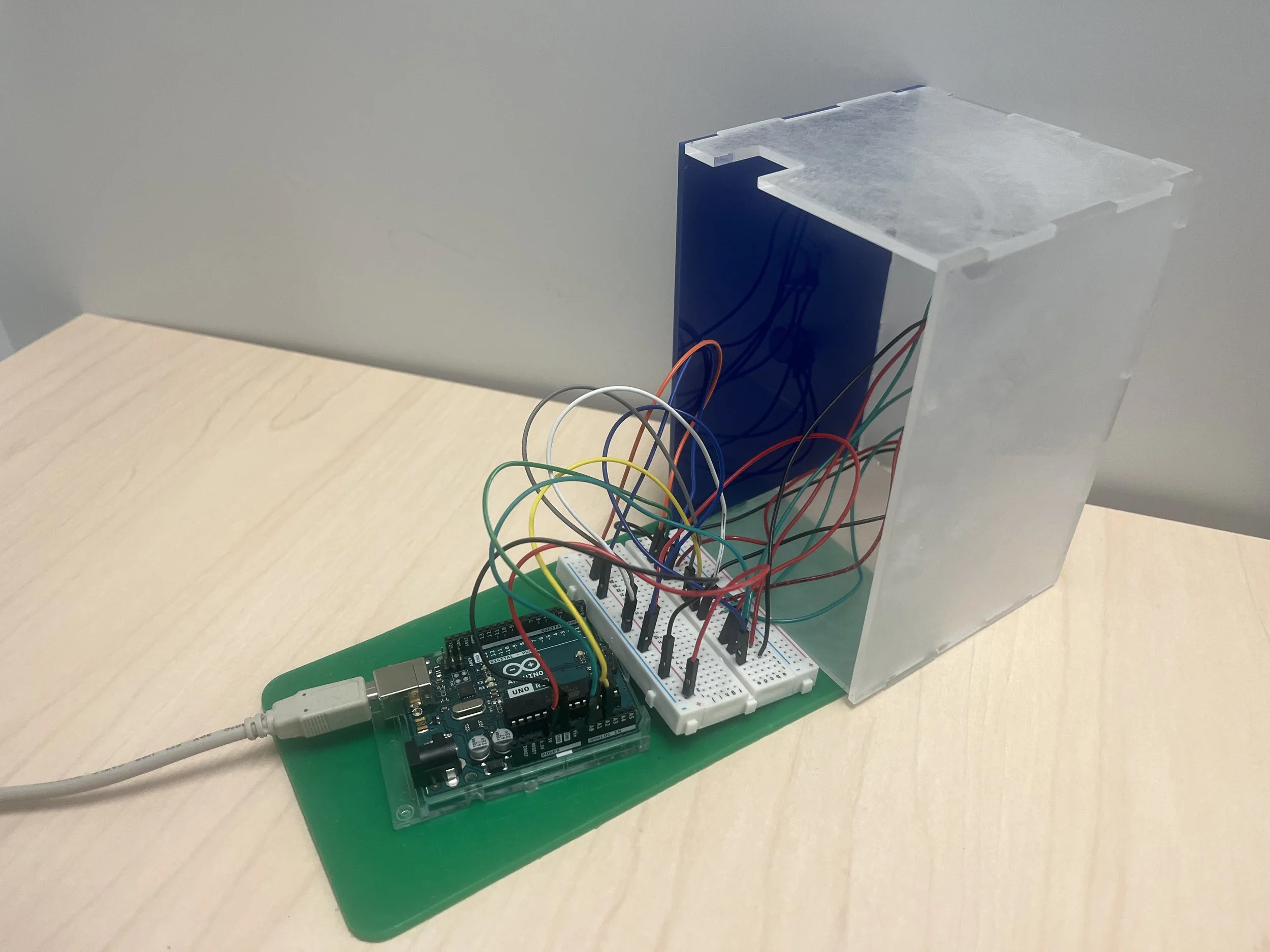

For the showcase demo, users could choose their preferred soundscape and fade between it and real-world environmental noise using the Arduino-powered dial control box.

* I used Bose QC45 headphones because (1) they have strong noise cancellation and (2) sanitary covers for over-ear headphones allow multiple people to use the same pair.

The Max interface enabled users to explore mixing preferred sounds with environmental noise, or replacing the noise entirely

Arduino control dials enabled users to manually fade in/out of the personalized soundscape

Prototype Testing

To prototype the experience, I used Max MSP to create three soundscapes:

(1) Noise pollution (2) Nature sounds (3) Hybrid

Participants could listen to different nature recordings and toggle between the three states. Design decisions were made based on sound preferences and overall user experience feedback.

“I like the water sound, but if I replaced traffic with water, I’d think my apartment is flooding”

Design decision: More sound libraries were added to enhance personalization, including synth and orchestral sounds.

“The abrupt transition between noise and nature makes the sounds feel unrelated”

Design decision: Dial controls were added to enable a gradual transition from noise pollution to preferred sounds.
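A gradual transition of this kind can be sketched as an equal-power crossfade. The code below is an illustrative Python sketch, not the project's Max or Arduino code: it assumes the dial arrives as a 10-bit reading (0 to 1023, the usual range of an Arduino analog input), and `crossfade_gains` and `mix` are hypothetical helper names.

```python
import math

DIAL_MAX = 1023  # assumed 10-bit dial reading, as from a typical Arduino analog input

def crossfade_gains(dial_value, dial_max=DIAL_MAX):
    """Map a dial reading to (noise_gain, preferred_gain) with an equal-power curve.

    At 0 only the environmental noise is heard; at dial_max only the
    preferred soundscape is heard. The squared gains always sum to 1,
    so perceived loudness stays roughly constant during the fade.
    """
    position = min(max(dial_value / dial_max, 0.0), 1.0)  # clamp to [0, 1]
    angle = position * math.pi / 2
    return math.cos(angle), math.sin(angle)

def mix(noise_sample, preferred_sample, dial_value):
    """Blend one sample of environmental noise with one sample of preferred sound."""
    noise_gain, preferred_gain = crossfade_gains(dial_value)
    return noise_gain * noise_sample + preferred_gain * preferred_sample

for reading in (0, 256, 512, 768, 1023):
    noise_gain, preferred_gain = crossfade_gains(reading)
    print(reading, round(noise_gain, 3), round(preferred_gain, 3))
```

A linear fade (gains of `1 - position` and `position`) would also work, but it tends to dip in loudness at the midpoint, which is why the equal-power curve is a common choice for crossfades.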

Social Design

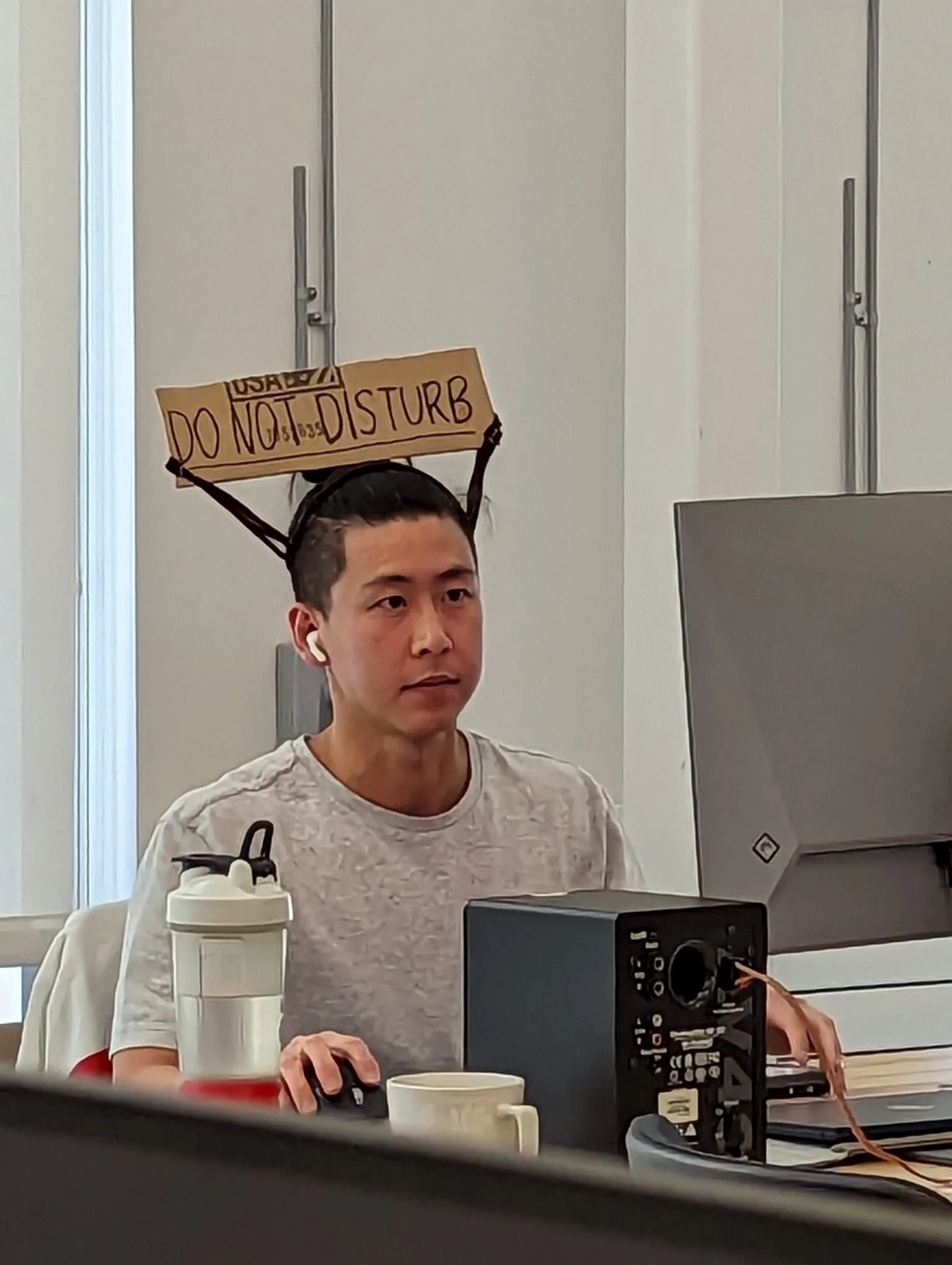

When wearing noise-canceling headphones, disturbances can be frustrating for both the wearer and the ‘disturber’. Acknowledging this norm, I explored material options to visually signal listening status (soundscape/focused state versus available state).

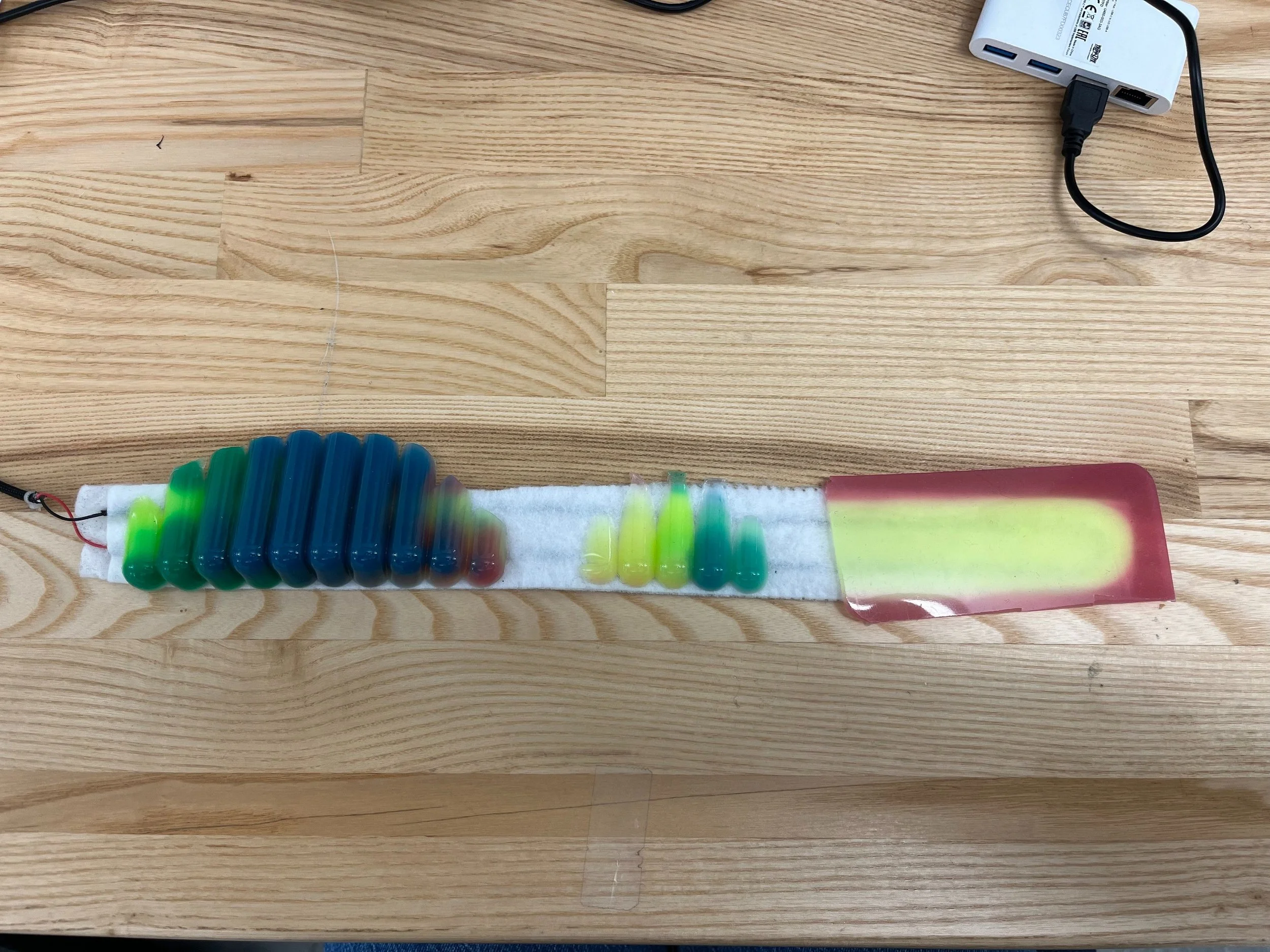

The final version included a silicone thermochromic headband sleeve that let the user nonverbally communicate their availability by changing the headband’s color.

Wonjoon’s solution to this social problem in the MDes studio inspired me to acknowledge it in my own project.

A material exploration for visually signaling busy/available states. The prototypes were kept abstract to invite discussion and imagination.

Final experience

Presentation

Future work

This project accidentally inspired me to become an audiophile. I look forward to learning more about topics in audio innovation (semantic hearing, personalized soundscapes, noise pollution mitigation, psychoacoustics, audio computers…)

Areas to further develop this project:

Integrate a noise recognition algorithm to reassign multiple sounds in real time

Iterate on the interaction beyond the Arduino dials and Max interface (e.g., explore voice commands)

Delve further into augmented reality soundscapes by considering contextual awareness (e.g., white noise for studying, sleep music…)

Special thanks to Carmine-Emanuele Cella & Luke Dzwonczyk for helping me learn Max, and to the Berkeley faculty, classmates, and friends who gave feedback that helped shape this project!